Government agencies lag in implementing AI policies

Low adoption of AI regulations among Asia-Pacific agencies raises concerns over governance and innovation.

Only 22% of large government agencies in the Asia-Pacific region have implemented AI policies, according to data from IDC. This reflects a significant gap in AI governance, posing risks to innovation and regulation, says Ravikant Sharma (Ravi), Research Director, Government Insights, IDC Asia-Pacific.

"While 86% of these agencies do recognize the value of AI regulation, only 22% of them have implemented these policies across the organisation," Sharma stated. "Additionally, 47% of these agencies have shared these policies only with key stakeholders." Sharma also highlighted that a lack of published guidelines for using proprietary data and inadequate enforcement mechanisms contribute to the slow adoption of AI policies.

Sharma pointed out that only a third of these agencies have established a committee or a board dedicated to AI governance, and of those, only 50% have appointed an executive, such as a chief AI officer, to provide oversight. "This clearly indicates that there is definitely an AI governance gap," he emphasised.

The risks of not having clear AI guidelines are significant. Sharma noted, "In the absence of this mechanism, it becomes very difficult for these agencies to justify the direction and investments in a unified sort of way." He warned that without proper AI policies, governments might resort to overly restrictive measures or even outright bans on the technology, which could stifle innovation. "If governments are banning the technology itself just for the sake of not having an AI policy, this is the most horrendous outcome," he said.

The challenge of keeping up with AI advancements while formulating policies is another critical issue. "The development of emerging technology quite often outpaces the development of policy itself," Sharma observed. To address this gap, some governments are adopting innovative approaches, such as trialling policies in controlled environments. "They are doing trials with very specific functions, such as with revenue or tax agencies, and then learning from mistakes," Sharma explained.

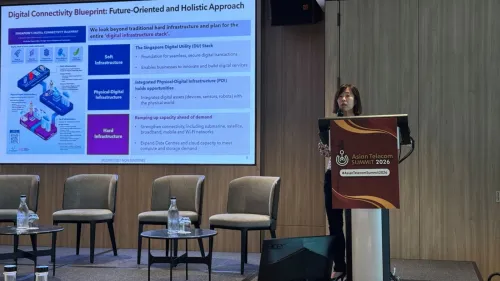

Singapore, Australia, South Korea, and India stand out among the markets tackling this in the public sector. "These countries are brilliant examples of how they are addressing generative AI usage while developing the policy in real time," Sharma noted.

A coordinated approach, such as establishing a dedicated AI body or committee, is essential for effective AI governance. "Effective governance is key to building trust with any emerging technology," Sharma concluded, underscoring the urgency for Asia-Pacific government agencies to strengthen their AI regulatory frameworks.